
How YouTube Brings the Right-Wing Scene Together

With more than 1.5 billion users a month, YouTube is one of the largest social media platforms in the world. It also serves as the communication and information backbone of the far right in the United States. In this blog post, Jonas Kaiser, associated researcher at HIIG, and Adrian Rauchfleisch, assistant professor at National Taiwan University, report preliminary findings from their analysis of 13,529 YouTube channels. The analysis shows how YouTube's recommendation algorithm contributes to the formation of a far-right filter bubble.

In the aftermath of the shooting at Marjory Stoneman Douglas High School in Parkland, Florida, a conspiracy theory arose. It suggested that the outspoken students calling for gun control were not victims but “crisis actors,” an allegation that has circulated in the United States since the Civil War. The theory quickly made the rounds among conspiracy and far-right channels on YouTube. For far-right channel owners, YouTube is more than just another platform: it is their informational battlefield, the place where they can post seemingly whatever they want, find support, unite around a shared message, and potentially catch the media’s attention. In our analysis, we show that YouTube’s recommendation algorithms actively contribute to the rise and unification of the far-right.

For far-right media makers and their subscribers, including channel creators, talking heads, or so-called produsers, YouTube is the backbone of much of their communication efforts. It combines radio talk shows, video, and the possibility to talk about everything the “mainstream” media won’t cover. It is their “alternative media network, a hybrid counterculture of entertainers, journalists, and commentators” (Forthcoming from Data and Society 2018). It is an informational cornerstone for many unfounded conspiracy theories, ranging from Pizzagate to Uranium One to QAnon. It is a place where they can connect with each other, talk, exchange opinions, discuss events like Charlottesville or the Parkland shooting, mobilise their base, and recruit new members.

The Far-right on YouTube

When the head of InfoWars, Alex Jones, goes live on YouTube to promote conspiracy theories or far-right talking points, he reaches over 2.2 million subscribers. His most popular videos have over 10 million views. When shaken parents, teachers, and students spoke out after the shootings at Sandy Hook Elementary School and in Parkland, Jones denounced them as “crisis actors”: paid government agents who, he claims, routinely stage elaborate events to divert attention from the government’s true intentions. On YouTube, Alex Jones is a beacon among conservative, conspiracy, and far-right channels. He has a large, devoted fan base that shares his content across multiple platforms, which makes him a ubiquitous figure in the far-right alternative media network.

When talking about online misinformation, bots, echo chambers, filter bubbles, and all the other concepts that might make anyone fear for our democracies, we usually talk about Twitter and Facebook. Much less discussed is YouTube, a social media behemoth with 1.5 billion users a month. It is the second most visited website in the US and worldwide, US teens use it much more frequently than Facebook or Twitter, and it is increasingly being used as a news source. Recently, a Guardian piece shed some light on the problematic role of YouTube’s video recommendations, and Zeynep Tufekci even called YouTube “the Great Radicalizer.” While watching one of Alex Jones’ videos won’t radicalise you, subscribing to his channel and following the ever-more-radical recommendations that YouTube throws your way might. Recommended videos can take a viewer down an ever more radical rabbit hole. But the most powerful mechanism might be subscriptions, which let users curate their own homepages so that they consistently reaffirm a particular political worldview.

Update: Alex Jones’ channel has since been deleted.

The Method

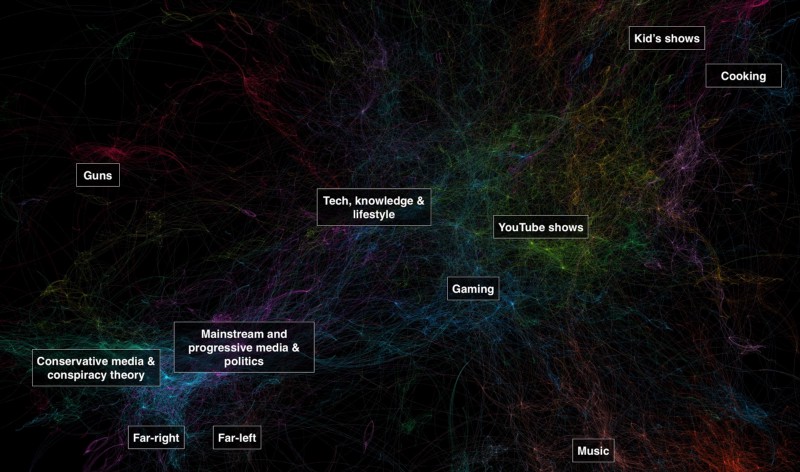

Fig. 1. YouTube channel network with labelled communities (node size = indegree, i.e. the number of recommendations within the network; community identification with Louvain)

We followed YouTube’s recommendation algorithm wherever it took us. We started with 329 channels from the right, 543 from political parties and politicians, the top 250 US mainstream channels (from Socialblade.com), and 234 channels from the left. This initial list was based on what scholars, the Southern Poverty Law Center, journalists, and users on Reddit threads and Quora labelled as right or left. In the next step, we collected all channels that these 1,356 channels themselves recommended on their channel pages, which added 2,977 channels. We then used a snowball method, following YouTube’s channel recommendations from our starting set in three steps. With each step the number of channels grew, and we ended up with 13,529 channels. We then visualised these results to create a map of YouTube’s universe: every channel is a node, and every recommendation from one channel to another is an edge.
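The snowball step can be pictured as a breadth-first walk over the recommendation graph, stopping after a fixed number of hops. The sketch below is an illustration, not the authors’ actual crawler: it assumes the recommendations have already been collected into a dictionary mapping each channel to the channels it recommends, and the function name `snowball` and the toy channel names are hypothetical stand-ins for scraped data.

```python
from collections import deque


def snowball(seeds, recommendations, steps):
    """Breadth-first snowball sample over a channel-recommendation graph.

    seeds: iterable of starting channel IDs.
    recommendations: dict mapping a channel to the channels it recommends
        (hypothetical stand-in for scraped YouTube data).
    steps: number of hops to follow outward from the seeds.
    Returns (nodes, edges); edges are directed (recommender, recommended) pairs.
    """
    nodes = set(seeds)
    edges = set()
    frontier = deque((channel, 0) for channel in nodes)
    while frontier:
        channel, depth = frontier.popleft()
        if depth == steps:
            continue  # hop limit reached: keep the node but do not expand it
        for target in recommendations.get(channel, []):
            edges.add((channel, target))  # every recommendation becomes an edge
            if target not in nodes:
                nodes.add(target)
                frontier.append((target, depth + 1))
    return nodes, edges


if __name__ == "__main__":
    toy = {"A": ["B", "C"], "B": ["D"], "C": ["A"], "D": ["E"]}
    nodes, edges = snowball(["A"], toy, steps=2)
    print(sorted(nodes))  # ['A', 'B', 'C', 'D']
    print(sorted(edges))  # [('A', 'B'), ('A', 'C'), ('B', 'D'), ('C', 'A')]
```

Because breadth-first order visits every channel at its shortest hop distance from the seeds, the `steps` parameter cleanly bounds how far the sample reaches; each extra step typically enlarges the sample considerably, as the growth to 13,529 channels illustrates.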

YouTube’s Galaxies

There are some general observations. The mainstream island sits in the middle of the map; it consists of Let’s Players (i.e. people who play video games on YouTube), pranksters, YouTube personalities, cat videos, and late-night shows. South of it is the music ecosystem with Justin Bieber, Katy Perry, and Rihanna. North-east of the mainstream is the kids’ entertainment section. West of the mainstream are the political communities. Here, the liberal and progressive community (purple) is clustered around The Young Turks. This community includes media outlets like CNN but also the channels of Barack Obama, Elizabeth Warren, and Bernie Sanders. It is closely connected to two other political communities, which we labelled the “far-left” and the “far-right.” While the far-left consists of communist, socialist, or anarchist channels, the far-right includes the so-called “alt-right” as well as white supremacists. We can also see that the far-left is not particularly big, and while it connects to the liberal and progressive community, the connection is not mutual: the far-left on YouTube is not very visible when you follow the platform’s recommendation algorithms.
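Whether a link between two communities is mutual can be checked by counting directed recommendation edges in each direction. The sketch below is illustrative only: it assumes every channel already carries a community label (for example from a community detection step), and the function name and the channel names and labels in the usage example are hypothetical toy data, not figures from the study.

```python
from collections import Counter


def cross_community_edges(edges, community):
    """Count directed recommendation edges between ordered pairs of communities.

    edges: iterable of (recommender, recommended) channel pairs.
    community: dict mapping each channel to its community label.
    Returns a Counter keyed by (source_community, target_community);
    edges inside a single community are skipped.
    """
    counts = Counter()
    for source, target in edges:
        a, b = community[source], community[target]
        if a != b:
            counts[(a, b)] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical toy network: two far-left channels recommend liberal ones,
    # but no liberal channel recommends a far-left one.
    labels = {"left1": "far-left", "left2": "far-left", "lib1": "liberal", "lib2": "liberal"}
    links = [("left1", "lib1"), ("left2", "lib2"), ("lib1", "lib2")]
    counts = cross_community_edges(links, labels)
    print(counts[("far-left", "liberal")], counts[("liberal", "far-left")])  # 2 0
```

A strongly lopsided pair of counts, as in this toy case, is what a one-way connection looks like in the data: one community points to another without being pointed back to.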

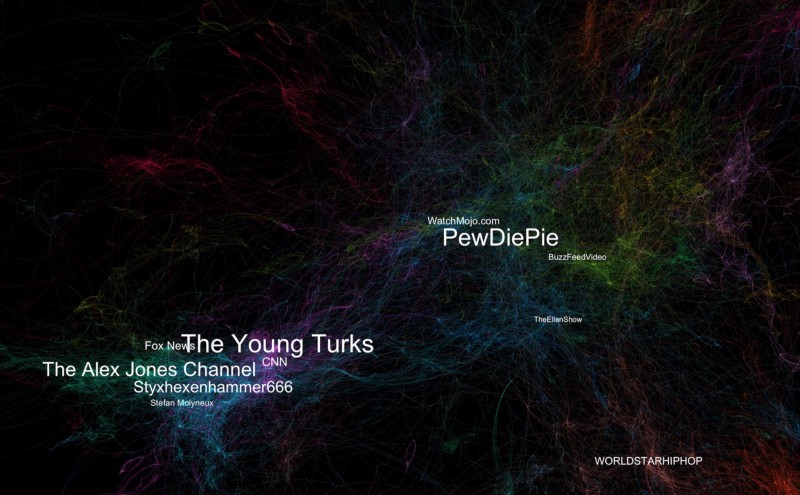

For comparison: YouTube’s most successful gamer and personality, Pewdiepie, has over 60 million subscribers. Justin Bieber has 33 million. Superwoman has 13 million. Simon’s Cat has 4. Stephen Colbert has 4. Meanwhile The Alex Jones channel has 2 million subscribers. And although this seems comparatively low, the question is compared to what. Barack Obama’s channel has 552k subscribers, Donald Trump’s has 112k, the official GOP channel has 38k, the official Democratic channel has 9k. Within the political communities, Alex Jones’ channel is one of the most recommended ones and as we will show, it connects the two right-wing communities.

Fig 2. YouTube channel network with selected channel names (node size = indegree / amount of recommendations within network; community identification with Louvaine)

Locating the Far-right

The community detection algorithm identified two closely connected communities that discuss right-wing politics. The first community is closer to mainstream conservative politics. Although prominent GOP politicians and the GOP channel itself are part of this community, they are not popular by YouTube’s metrics (subscribers, views, etc.). The biggest (that is, most highly recommended) channels in this community are Fox News, BPEarthWatch, and Alex Jones. Even though it is the more “mainstream” right-wing cluster, this community created by YouTube’s algorithms ranges from conservative media and politicians to conspiracy theorists.

We consider the second community the far-right. It ranges from right-wing extremists to “Red Pillers.” For example, it includes so-called “alt-right” personalities like Richard Spencer or Brittany Pettibone, but also David Duke and Styxhexenhammer666. It also is not limited to the US; it includes right-wing extremists from Europe.
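In the figures, node size reflects indegree, the number of recommendations a channel receives from other channels in the network, so the “biggest” channels are the most recommended ones. A minimal sketch of that ranking follows, assuming the network is available as a list of directed (recommender, recommended) pairs plus the set of crawled channels; the function name `top_by_indegree` and the toy channel names are hypothetical.

```python
from collections import Counter


def top_by_indegree(edges, network, k):
    """Rank channels by indegree within a given set of channels.

    edges: iterable of (recommender, recommended) pairs.
    network: set of channel IDs that make up the network.
    k: number of top channels to return.
    Self-recommendations and edges leaving the network are ignored.
    Returns a list of (channel, indegree) pairs, highest first.
    """
    indegree = Counter(
        target
        for source, target in edges
        if source in network and target in network and source != target
    )
    return indegree.most_common(k)


if __name__ == "__main__":
    net = {"a", "b", "c", "d"}
    recs = [("a", "c"), ("b", "c"), ("d", "c"), ("a", "b"), ("c", "b"), ("b", "x")]
    print(top_by_indegree(recs, net, 2))  # [('c', 3), ('b', 2)]
```

Counting only edges within the network matches the captions’ definition of node size as recommendations “within network”; the edge to the outside channel `x` does not count toward anyone’s size.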

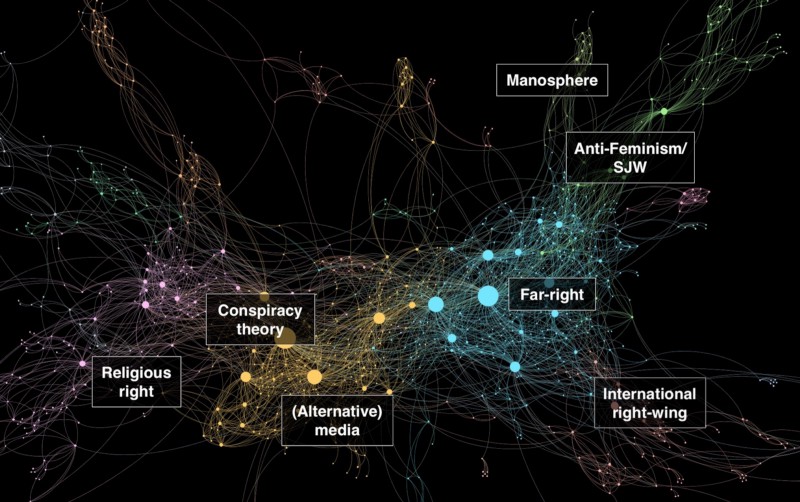

Fig. 3. Right-wing channel network with labelled communities (node size = indegree, i.e. the number of recommendations within the network; community identification with Louvain)

Next, we focus on the two right-wing communities and how YouTube connects them (Fig. 3). A community detection algorithm identifies several distinct communities, but we see more of the same: YouTube lumps Fox News and GOP accounts into the same community as conspiracy theory channels like Alex Jones. As the most recommended channels in that community were media outlets, we labelled it “(Alternative) media.” We labelled the other central community “far-right,” as it consists of “alt-right” and “alt-light” channels as well as white nationalists. In addition, there is a community that focuses on conspiracy theories, one that mostly publishes videos against political correctness or feminists, and the Manosphere. There are also clusters of the religious right and the international right-wing. Some communities are more closely connected than others, but for YouTube’s recommendation system, they all belong in the same bucket. This is highly problematic for two reasons. First, it suggests that being a conservative on YouTube means that you’re only one or two clicks away from extreme far-right channels, conspiracy theories, and radicalising content. Second, YouTube’s algorithm here contributes to what Eli Pariser called a “filter bubble,” that is, an algorithmically created bubble that “alters the way we encounter ideas and information.”
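The figure captions name Louvain as the community identification method. Louvain repeatedly moves nodes between communities to increase modularity, a score that compares the share of edges falling inside communities with the share expected if edges were placed at random. The sketch below computes only that score for a given partition, not the full Louvain search, and it assumes recommendation edges are treated as undirected and unweighted, which is a simplification; the function name and toy graph are hypothetical.

```python
from collections import defaultdict


def modularity(edges, community):
    """Modularity of a partition of an undirected, unweighted graph.

    edges: iterable of (u, v) pairs; direction is ignored (simplifying assumption).
    community: dict mapping every node to a community label.
    Uses Q = sum over communities c of (L_c / m) - (d_c / (2m))^2, where
    m is the number of edges, L_c the edges inside c, d_c the total degree of c.
    """
    # Deduplicate edges, treating (u, v) and (v, u) as one edge; drop self-loops.
    undirected = {frozenset(pair) for pair in edges if pair[0] != pair[1]}
    m = len(undirected)
    if m == 0:
        return 0.0
    internal = defaultdict(int)    # L_c: edges with both endpoints in c
    degree_sum = defaultdict(int)  # d_c: summed degree of the nodes in c
    for edge in undirected:
        u, v = tuple(edge)
        degree_sum[community[u]] += 1
        degree_sum[community[v]] += 1
        if community[u] == community[v]:
            internal[community[u]] += 1
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2 for c in degree_sum)


if __name__ == "__main__":
    # Two triangles joined by a single edge (hypothetical toy graph).
    toy = [("a", "b"), ("b", "c"), ("a", "c"), ("d", "e"), ("e", "f"), ("d", "f"), ("c", "d")]
    split = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
    lumped = {node: 0 for node in split}
    print(round(modularity(toy, split), 3))   # 0.357
    print(round(modularity(toy, lumped), 3))  # 0.0
```

Splitting the two triangles scores about 0.357, while putting every node in one bucket scores 0. Louvain searches for partitions with high values of this score, so when it still places very different channels in one community, those channels are densely interlinked by recommendations.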

The Algorithmic Thomas Theorem

No one knows exactly what goes into YouTube’s channel recommendations. It is safe to assume that contributing factors include users’ watch histories, preferences, and audience overlap, as well as channel owners’ own recommendations. When we applied our method to the German case, we were able to identify a YouTube-created right-wing filter bubble. Think of it this way: YouTube’s algorithms are not creating something that is not already there. These channels exist, they interact, and their users overlap to a certain degree. YouTube’s algorithm, however, connects them visibly via recommendations. It is, in this sense, an algorithmic version of the Thomas theorem, which famously states that “If men define situations as real, they are real in their consequences.” We could thus say that if algorithms define situations as real, they are real in their consequences, and, we might add, they potentially shape users’ future behaviour. As our data show, channel recommendations connect diverse channels that might be more isolated without the influence of the algorithm, and thus help to unite the right.

Indeed, the far-right in the United States is rather fragmented, which can be traced to the very different groups within it (Zhou et al., for example, identify militia, white supremacy, Christian identity, Eco-terrorism, and Neoconfederate clusters) and thus to very different topics and priorities. While we can still identify some of these communities, YouTube’s algorithm pushes many channels towards the gravitational center of a larger right-wing bubble: highly recommended channels such as Alex Jones or Styxhexenhammer666 keep this right-wing bubble connected. Since the so-called “alt-right” burst onto the far-right scene, it has come to act as a bridge between the groups mentioned above. In much the same way that the “Unite the Right” rally in Charlottesville (where a white supremacist drove a car into a crowd of counter-protesters, injuring many and killing Heather Heyer) sought to bring together many far-right influencers, so too does YouTube’s recommendation algorithm bring together far-right channels.

Postscript: YouTube’s Responsibility

YouTube has stated that it adjusted its algorithm in 2016, 2017, and 2018, but the questions remain: what actually changed? Does YouTube even see a problem in our findings? And, more importantly, does it think it is responsible? After all, its system “works,” right? If you want gaming videos, you get more gaming videos. If you want rock music, YouTube will recommend more rock music. And if you’re into the far-right, YouTube has something for you too, even conspiracy theories. This calls into question the responsibility of YouTube and of its algorithmic pre-selection, which might lead to more radicalisation. At least with regard to the last question, it seemed after the Parkland shooting that YouTube would change: it was reported that some right-wing channels had been banned and that Alex Jones’ videos had received two strikes. One more strike would have meant a ban of the channel. Jones even suggested that his main channel was frozen and about to be banned. Alas, this turned out to be a hoax (most likely to promote his new channel), and it also turned out that some of the bans and strikes on right-wing channels were mistakes by newly hired moderators. As a result, the content moderators’ decisions were reversed, but the algorithm that has helped to unite the far-right remains.

This article was first published on Data & Society’s Media Manipulation blog.

This post reflects the opinion of the authors and neither necessarily nor exclusively the opinion of the institute. For more information about the content of these posts and the associated research projects, please contact info@hiig.de
