
Between experimentation spaces and traffic light systems: Negotiating artificial intelligence at the company level

Systems based on artificial intelligence (AI) have advanced into many areas of the world of work. But how can the introduction of such systems be reconciled with the rights and interests of workers? This is the question management and worker representatives are negotiating at the company level.

In the media, vendors of AI systems like to portray them as uncontrollable. In fact, they are far from it: depending on how developers and organisations design and use artificial intelligence, these systems can have a positive or a negative impact on the workplace. That is why a number of governments around the world have set out to regulate the use of AI. After all, AI is neither an uncontrollable nor a neutral force: AI is political.

The European Union is currently negotiating the AI Act. At the same time, negotiations on the use of AI are also taking place within organisations. Here, works councils play a key role in safeguarding the interests of workers and ensuring that AI systems improve working conditions rather than worsen them. However, negotiating AI in a practical and constructive way, beyond the media-generated hype, is a challenge not only for political actors but also for organisational ones. Our interviews with worker representatives show that while AI confronts them with new challenges, they are also finding new ways of dealing with these challenges in negotiations at the company level.

Artificial Intelligence presents new challenges for the representation of workers’ interests

One source of uncertainty in AI negotiations is that workers often cannot tell at first that AI is being used at all, since it comes in the form of software solutions that are sometimes integrated into standard packages. In addition, vendors of AI systems often cite trade secrets and do not disclose details about how the systems they offer work. Management also contributes to this lack of transparency by not providing employees and their representatives with sufficient information about AI projects in the organisation.

At the same time, a range of different technologies is often negotiated under the label “AI”. This is because AI does not refer to a specific technology; it is an umbrella term describing the “frontier of computational advancements”. Even though AI is nowadays closely associated with machine learning methods, discussing regulation and negotiating under the contested term “AI” remains a challenge. AI regulation is complicated not only by this conceptual debate but also by the fact that AI does not stop at geographical borders. AI projects are often global in nature, so the negotiation partners of worker representatives may be based abroad and unfamiliar with the provisions of German co-determination.

A constructive debate on whether AI is actually needed, and an assessment of its effects on workers, are further impeded by the polarised media discourse. AI is portrayed both as “unstoppable progress” that will safeguard the competitiveness of companies and as a threat to society and the workplace. In some cases, the same actors, namely the technology providers themselves, publicly represent both positions. When regulating and negotiating AI in organisations, it is important to question this hype critically.

Negotiating Artificial Intelligence in the interest of workers

Worker representatives are approaching AI negotiations by developing and testing new negotiation strategies. One example is making regulations more flexible to a certain extent by agreeing to clearly defined and limited pilot projects or “experimentation spaces”. The vagueness of the concept of AI also means that technical details play a secondary role in regulations. Instead, agreements foreground the effects of AI systems as well as the goals and purposes of their implementation.

At the same time, however, negotiations and regulations on AI are also highly structured. Some organisations introduce new processes and instruments such as “traffic light systems”, which classify AI systems according to their criticality and specify the further procedure based on this classification. Depending on the outcome, an AI system may, for example, be introduced without a separate regulation, be subject to a special review, or not be introduced at all. Checklists and AI project profiles also help to structure the negotiations.

Finally, it is important for worker representatives to network when negotiating AI. A shared long-term vision for working with AI – for example in the form of guidelines or principles – creates the basis for internal collaboration. External experts, researchers, or AI-experienced worker representatives from other organisations can support the development of this vision and the evaluation of AI projects.

Worker representatives shaping the future of work

Worker representatives will continue to play an important role in shaping AI projects in the interests of workers. However, the increasing technological complexity of the systems they negotiate demands considerable resources. If these resources are unavailable, co-determination can be undermined because worker representatives become overburdened. Current discussions around generative AI and the regulatory challenges it poses underline the need to explore further how AI is negotiated within organisations. In doing so, the narratives of technology providers should be critically questioned, and the focus should be on the real risks and potentials of AI rather than on hypothetical ones.

This is a shortened version of an article previously published on the WZB blog on 27.09.23. It is based on findings from an interview study with worker representatives and was presented at the conference Digitalisation, Work and Society in the Post-Pandemic Constellation. For more information, see the discussion paper AI and Workplace Co-determination and the handbook AI in Knowledge Work.

This article includes findings from projects for which the author received funding from the Federal Ministry of Labour and Social Affairs and the Hans Böckler Foundation.

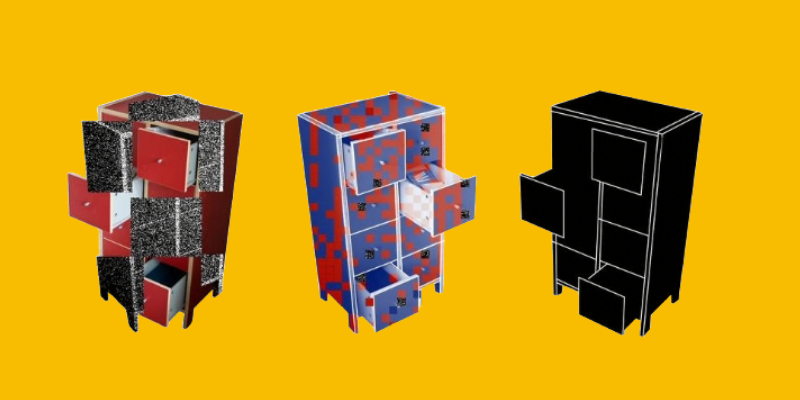

Image credit: Anton Grabolle / Better Images of AI / Classification Cupboard / CC-BY 4.0

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
