Blind spot sustainability: Making AI’s environmental impact measurable

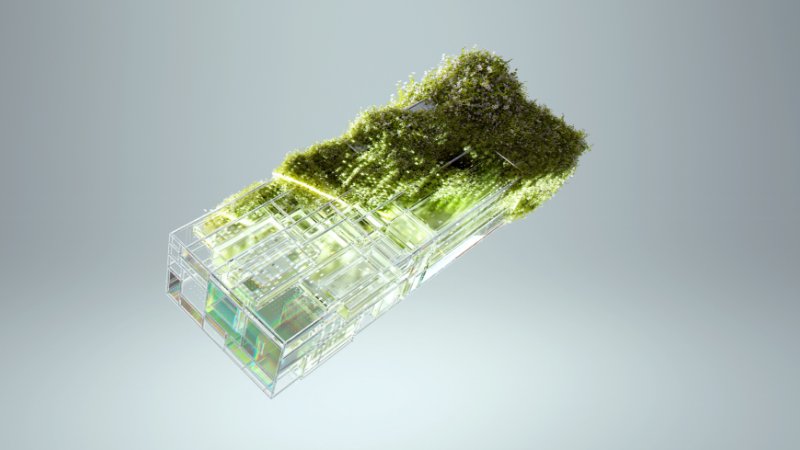

Efficient, smart and environmentally friendly? Artificial intelligence is often hailed as the solution to many of the major challenges we face today, including climate change. However, behind this optimistic vision of the future lies a blind spot: AI consumes vast quantities of energy, produces CO₂ emissions, and its environmental footprint remains largely opaque. Reliable data, suitable measurement methods and binding standards for assessing it are still lacking. This article explains what needs to change in order to make AI's environmental impact measurable.

Every stage of an AI system's life cycle demands vast resources: from hardware manufacturing and data centre construction to the development and training of AI models and their subsequent use. At the end of this chain lie heaps of outdated hardware: e-waste. All of these steps require rare earths, energy and water, and all of them must be included in AI sustainability assessments (Smith & Adams, 2024).

It’s important to note that sustainability has many facets – ecological, social and economic. This blog post focuses on environmental protection – in other words, on the ecological dimension. It’s about how we can conserve resources and preserve nature.

AI and ecological sustainability — What we (don’t) know

For a long time, AI was seen as a technological beacon for the green transition. Increasingly, however, its substantial environmental impact is being discussed in more critical terms. On our Digital Society Blog, we have already shown how the public discourse around AI is shifting in Germany (Liebig, 2024).

Little is known about AI's actual total resource consumption. Concrete data and figures remain scarce and are often kept under wraps. Major AI providers and data centre operators such as Google or Meta offer little transparency about their actual usage; over a third of these operators are based in the US (Hajonides et al., 2025). As a result, AI's ecological footprint can currently only be estimated rather than precisely measured (Smith & Adams, 2024). To make matters worse, we lack standardised methods to reliably assess AI's environmental impacts across its entire life cycle (Kaack et al., 2022). A comprehensive evaluation must include both resource consumption and the resulting emissions, such as those from generating the electricity that runs data centres (Smith & Adams, 2024).

AI for environmental protection?

A simplistic black-and-white view of AI isn’t helpful here. There are projects that deliberately use AI to protect the environment. On our Digital Society Blog, we’ve presented such examples. For instance, AI can help detect leaks in wastewater systems, thus protecting drinking water and ecosystems from contamination. It can also be used to identify and preserve habitats of endangered species (Kühnlein & Lübbert, 2024).

But these projects also rely on the same resource-intensive technologies. This makes it difficult to gauge their actual environmental benefit. We lack robust data to assess the environmental gains versus the resources consumed over the entire life cycle. So, do the trade-offs render these solutions unsustainable?

Making AI’s environmental impact measurable

This is where the new research project Impact AI: Evaluating the impact of AI for sustainability and public interest comes in. The project is run by the Alexander von Humboldt Institute for Internet and Society (HIIG) in collaboration with Greenpeace and the Economy for the Common Good. Over five years, the project will examine 15 AI initiatives from various sectors. Its goal: to systematically and holistically assess their real impact on society and the environment. A new methodology is being developed that combines indicators such as energy efficiency and AI-generated emissions with a qualitative evaluation of ethical and social dimensions. This approach aims to make both the sustainability of AI and sustainability through AI visible. It is intended to help identify the potential and strengths, as well as the limitations, of AI projects that seek to contribute to sustainability and the public interest.

Clear data and criteria are still lacking, both for evaluating how sustainable AI systems themselves are and for assessing their contribution to environmental goals. This presents a challenge not only for conscientious end users but particularly for organisations aiming to develop AI in a responsible and sustainable way.

What does sustainable AI look like?

How can we align AI with ecological sustainability? Initial ideas were developed during a workshop at the conference Yes, we are open?! Designing Responsible Artificial Intelligence, organised by the Berlin University Alliance, the University of Vienna, Wikimedia, the Weizenbaum Institute, and the HIIG. The event focused on the intersection between open knowledge, AI and science. A key question: To what extent does open access to research findings and data influence fair and sustainable AI development?

In a discussion moderated by HIIG, participants from academia, civil society and NGOs jointly formulated policy recommendations aimed at advancing the discourse on AI’s ecological responsibility.

A monitor for greater awareness?

One such recommendation focused on AI systems' resource consumption: How can it be made more transparent, especially for users who want to weigh the benefits of AI use against its environmental costs? Would people use ChatGPT or other AI tools as frequently if they knew that a single chatbot conversation can consume up to 500 ml of water (Li et al., 2023)?

This kind of direct feedback – similar to the “flight shame” phenomenon – could encourage a more critical perspective on individual AI use. However, for people who rely on AI in their daily work – to be more productive, generate content faster or automate decisions – there may be little real choice to opt out.

Individualising the problem, however, risks shifting the burden. It foregrounds users' responsibility for AI sustainability, while structural levers, such as disclosure of resource consumption or enforcement of environmental protection, remain in the background.

So, consumption monitoring may not be a silver bullet, but it’s a tool to raise awareness about the link between AI use and resource demand. And that awareness is a critical foundation for moving the public debate on AI’s ecological consequences forward.

The elephant in the room: Lack of transparency, missing data

Developing an accurate consumption monitor still faces one major hurdle: a lack of reliable data and transparency about AI’s environmental effects.

The discussion group quickly reached consensus that independent measurement is needed. A key lever: greater insight into the data centres that run AI systems. How much computing power is used for AI? Where are the servers located? What are the energy sources? How much water is consumed? Most of these questions remain unanswered, simply because operators don’t disclose the data – yet.

The Data Centre Registry created under the EU Energy Efficiency Directive holds enormous potential. It aims to establish a European database for data centres. In Germany, operators are now required to register and annually report information on energy use and heat recovery to the Federal Ministry for Economic Affairs and Climate Action. However, it’s still unclear how much of that computing power goes specifically to AI.

Thus, calls for comprehensive reporting and documentation standards persist. These standards must be uniform and holistic so that environmental impacts can be assessed and compared across AI's life cycle. Moreover, measurement must not be left to industry self-monitoring alone. To avoid greenwashing, independent or public entities must oversee these assessments.

AI policy is sustainability policy

To implement these demands, a change in mindset is necessary. Policymakers must recognise the risk that AI poses to ecological sustainability. Resource-intensive AI systems raise questions of responsibility – and that makes AI policy environmental policy, too.

What could legislators do? Existing environmental assessment tools and incentive systems could be expanded and applied to AI. This includes comprehensive life-cycle assessments for digital services. Appropriate tools are already available in the construction industry. But to do this, the entire digital supply chain, meaning everything required to provide AI systems, must be disclosed and factored in. Additionally, carbon pricing ought to be extended to digital services, especially those provided from outside Europe. That way, emissions from non-European data centres would also be accounted for. While mechanisms for carbon border adjustment exist within the EU, they currently apply only to products such as steel or fertilisers.

Looking ahead

There was also a sense of frustration in the discussion. Many participants criticised the slow pace of political processes and the lack of serious sustainability thinking in AI deployment. One question came up over and over: How can individuals and organisations take responsibility themselves?

Yet there was also a sense of momentum. Participants were motivated to jointly push for more transparency about AI's environmental impact. The idea is to strengthen public discourse and ensure that AI and environmental policy are increasingly seen as interconnected. Science can play a key role here by developing better methods to evaluate AI's resource use and making them accessible.

From this shared concern, a new network has emerged: “AI and Sustainability”. Researchers and civil society representatives have come together to regularly exchange ideas, critically monitor developments and propose concrete actions. Their goal: to place AI’s ecological responsibility permanently on the political and societal agenda — not someday, but now.

References

Hajonides, J., McCarthy, J., Koulouri, K., & Camargo, R. (2025). Navigating AI's Thirst in a Water-Scarce World: A Governance Agenda for AI and the Environment. NatureFinance. https://www.naturefinance.net/resources-tools/navigating-ais-thirst-in-a-water-scarce-world/

Kaack, L. H., Donti, P. L., Strubell, E., Kamiya, G., Creutzig, F., & Rolnick, D. (2022). Aligning artificial intelligence with climate change mitigation. Nature Climate Change, 12(6), 518–527. https://doi.org/10.1038/s41558-022-01377-7

Kühnlein, I., & Lübbert, B. (2024). Ein kleiner Teil von vielen – KI für den Umweltschutz [One small part of many – AI for environmental protection]. Digital Society Blog. https://doi.org/10.5281/zenodo.13221001

Li, P., Yang, J., Islam, M. A., & Ren, S. (2023). Making AI Less "Thirsty": Uncovering and Addressing the Secret Water Footprint of AI Models. arXiv. http://arxiv.org/abs/2304.03271

Liebig, L. (2024). Zwischen Vision und Realität: Diskurse über nachhaltige KI in Deutschland [Between vision and reality: Discourses on sustainable AI in Germany]. Digital Society Blog. https://doi.org/10.5281/zenodo.14044890

Smith, H., & Adams, C. (2024). Thinking about using AI? Here's what you can and (probably) can't change about its environmental impact. Green Web Foundation. Retrieved 14 April 2024, from https://www.thegreenwebfoundation.org/publications/report-ai-environmental-impact/

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
