Making sense of our connected world

AI – A metaphor or the seed of personality of machines in a digitised society?

Is artificial intelligence a metaphor, or can machines be intelligent in the same way human beings are? This has been a contested question ever since the concept was developed. While the so-called weak AI thesis treats it as a metaphor, the strong AI thesis takes intelligence literally. The answer to this question might point to the future role of intelligent machines in the digital society. This article is part of an ongoing series on the politics of metaphors in the digital society. The series is edited by Christian Katzenbach (HIIG) and Stefan Larsson (Lund University Internet Institute).


Will computers, robots and machines one day be considered intelligent persons? Will automatic agents imbued with artificial intelligence become members of our society? The concept of personality is fluid. There were times when slaves had no personality rights. Recently, there has been a growing movement arguing for conferring legal personality upon animals. Hence, our concept of personality might change in the course of the digitisation of society. This might be due to advances in artificial intelligence, but also to the way the term is framed.

Is artificial intelligence a metaphor or a descriptive concept? This question cannot be answered definitively, as there is a semantic struggle surrounding the concept of artificial intelligence. As will be shown, some people treat artificial intelligence as a broad metaphor for the ability of machines to solve specific problems. Others take it literally and conceive of artificial intelligence as being the same as human intelligence. Some researchers go as far as to reject the concept completely. To shed more light on the issue, it is worthwhile to go back to the time the term was coined.

Historical Origins

The term artificial intelligence was coined for a six-week summer workshop held in 1956 at Dartmouth College in Hanover, New Hampshire, which John McCarthy, Marvin Minsky, Nathaniel Rochester and Claude Shannon organised with support from the Rockefeller Foundation. They introduced their grant application in the following terms:

…The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.

Interestingly, even the organisers of the conference did not really approve of the term artificial intelligence. John McCarthy stated that “one of the reasons for inventing the term ‘artificial intelligence’ was to escape association with ‘cybernetics’”, as he did not agree with Norbert Wiener. Yet the term caught on and came to be used frequently. Today, it denotes a subdiscipline of computer science.

The weak AI thesis vs the strong AI thesis

As can be seen from the statement above, there has always been an ambiguity to the term. The statement can be taken to mean that every aspect of human intelligence can be replicated. Yet it is explicitly framed as a conjecture, and the use of the word simulate suggests that artificial intelligence and human intelligence remain different. The different interpretations of artificial intelligence have been conceptualised as the strong and the weak AI thesis. The strong AI thesis suggests that such a simulation in fact replicates the mind and that there is nothing more to the mind than the processes simulated by the computer. By contrast, the weak AI thesis suggests only that machines can act as if they were intelligent. The weak AI thesis transfers the concept of intelligence to a context in which it normally would not apply. Therefore, the term intelligence is used in a metaphorical sense in the context of weak AI.

One of the active proponents of the concept of weak AI was Joseph Weizenbaum, a Jewish German-American computer scientist who was responsible for some important technical inventions, but who remained critical of the societal impacts of computers. He programmed the famous chatbot ELIZA. Weizenbaum used a few formal rules for the chatbot to keep the conversation going. The chatbot analyses the sentence structure and grammar of the user's input and either rephrases the previous statement as a question or replies with a standard utterance.
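To make the idea of "a few formal rules" more tangible, here is a minimal sketch in Python. It is not Weizenbaum's original program: the patterns, the pronoun table and the function names `reflect` and `respond` are all hypothetical and chosen purely for illustration. The sketch only shows the general principle of matching a sentence pattern, turning the user's words into a question, and falling back on a standard utterance.

```python
import re

# Hypothetical pronoun swaps so the user's own words can be mirrored back.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# Hypothetical rules: each pairs a sentence pattern with a reply template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Standard utterance used when no rule matches.
DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in the captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance: str) -> str:
    """Return the reply of the first matching rule, or the default reply."""
    cleaned = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I feel sad about my job.", "The weather is nice."]:
        print(">", line)
        print(respond(line))
```

Nothing in this sketch understands what the user says. The apparent attentiveness of the replies comes entirely from the rules, which is the sense in which intelligence is used metaphorically under the weak AI thesis.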

The proponents of the strong AI thesis have tried to find ways to replicate processes in the brain, for example, by designing neural networks. The strong AI thesis suggests that machines can be intelligent in the same way as human beings can. One of its proponents, Klaus Haefner, once had an exchange with Weizenbaum. They used arguments that had already been anticipated by Alan Turing in his seminal text “Computing Machinery and Intelligence” from 1950. He famously replaced the question “Can machines think?” with an “imitation game”. In this game, the interrogator has a written conversation with one human being and one machine, both of which are in separate rooms. The task Turing describes is to design a machine that acts such that the interrogator cannot distinguish it from the human being based on its communication. Therefore, the goal is not to design a system that equals a human being, but one that acts in such a way that a human being cannot tell the difference. Whether this is achieved by replicating the human brain, or in any other way, was not important for Turing.

Flying Differently than Birds

In the literature on AI, the possible advances of the field are compared to other technologies like aeroplanes. Early models tried to imitate birds, while in the end aeroplanes came to fly in a very different way. One aim of Turing’s article was to shift the focus from a general and teleological debate to the actual problems to be solved. According to his approach, there is no single grand answer to the question of what AI can achieve in the future, but there are many small improvements to machines.

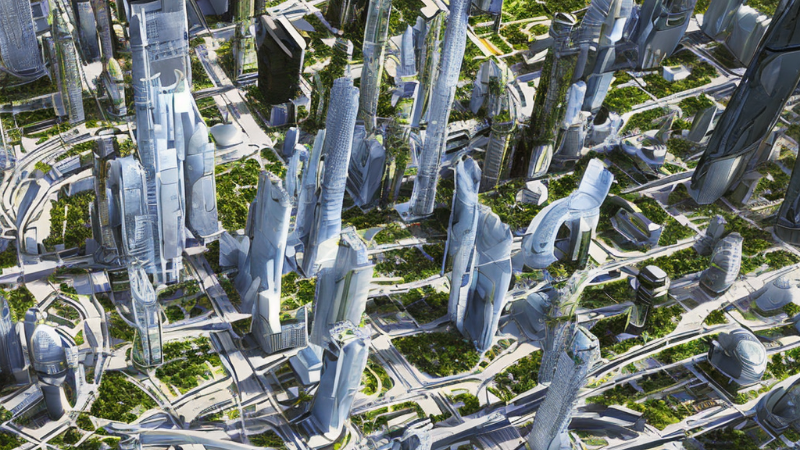

There might be a day when we suddenly realize that in many respects the line between humans and machines is blurred. Games like chess or Go are examples of problems in which machines have surpassed humans. If this trend continues, it might give a completely different connotation to the term digital society. While we cannot say that we are there yet, does that mean it can never happen? Try “bot or not”, an adaptation of the Turing test for poems. You will find that even today, it can be tricky to distinguish machines from human beings.

If you are interested in submitting a piece yourself, send us an email with your suggestions.

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
