Deep fakes: the uncanniest variation of manipulated media content so far

At a time when we rely so heavily on the media for our sense of reality and our idea of ourselves, a phenomenon like deep fakes inevitably causes unease: a perfect illusion that projects the strange into the familiar. Deep fakes are not the first manipulated media content. So what triggers the extraordinary feeling of uncanniness that we associate with them?

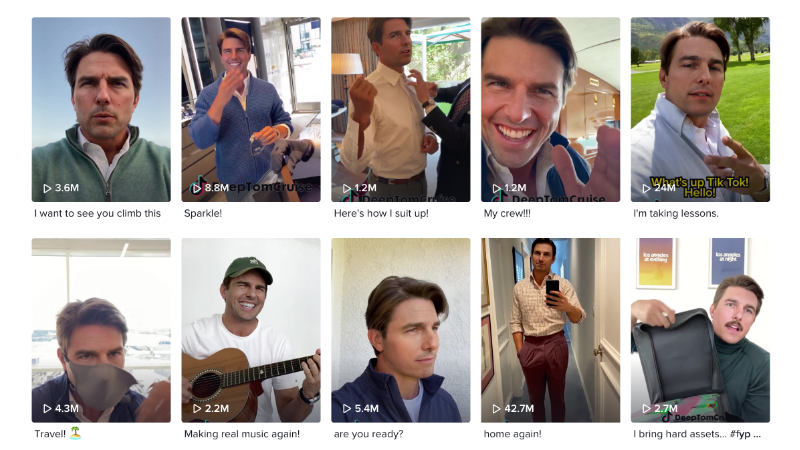

In early 2021, fake videos featuring a bogus version of actor Tom Cruise circulated on social media alongside comments that either praised their quality or lamented their worrisome perfection. These videos involved whole body movements (including Cruise’s characteristic mannerisms), which were performed by another actor for this purpose. At first sight, the short-lived attention given to the counterfeit Tom merely illustrates that deep fakes are becoming increasingly common. So why were these videos collectively regarded as noteworthy at all? The answer probably has to do with the performance in the videos, which broke with the expected deep fake aesthetics that usually focus on the face alone. A whole body copy of a well-known individual represented another giant step towards an undetectable and hence troubling illusion.

Manipulated media content is a historically consistent occurrence

Despite the impression one can easily get from these perfect simulations, practices of manipulating content in different media forms have a long tradition, of which deep fakes are only one of the most recent variations. More generally, the term deep fake refers to the use of machine learning (deep learning) to create simulated content that purports to give a truthful depiction of the face, and sometimes also of the voice, of a real person. The increasing relevance of deep fakes comes from the combination of relatively inexpensive access to these technologies and a discomforting rise of misinformation campaigns on social media platforms.

Deep fakes are commonly associated with the communicative intention to deceive and potentially to manipulate. They raise concerns about personal rights and about the consequences for mediated realities, including public discourses, journalism and democratic processes. In essence, they are seen by some as nothing less than a “looming challenge for privacy, democracy, and national security” (Chesney and Citron 2019). Interpretations of these developments often include two very familiar generalisations: dramatic accounts of what is new and dangerous about an evolving technology (e.g. Greengard 2020) are put into perspective by approaches that emphasise that there is “nothing new here” and instead demand a shift in focus towards the underlying social structures (Burkell/Gosse 2019). Between these two perspectives, which are tilted either towards technological determinism or social constructionism, it is necessary to find middle ground. This middle ground should take into account the recurring motifs commonly associated with the rise of any (media) technology, but at the same time emphasise some defining characteristics of deep fakes that make them a powerful tool of deception.

In other words: What is actually new about the deep fake phenomenon? From a historical perspective, it is obvious that the practice of manipulating content in order to influence public opinion far predates digital technologies. Attempts to manipulate images are as old as photography itself. In the most recent technological era, a critical stance on simulated content is even fundamentally ingrained in debates on digital media, with simulation being one of their core characteristics. Questions about the loss of authenticity and authorial authority, similar to the current anxieties voiced around deep fakes, were also habitually raised with previous media technologies, digital photography in particular (Lister 2004). When stylising the digital photo, the dubitative, the profound and inescapable doubt of what we see in it, lies at the core of its aesthetics (Lunenfeld 2000), even as the ease with which each pixel can be altered independently brings it closer to a painting than to a representation of reality.

At the same time, and irrespective of these oftentimes sinister undertones of manipulation, various types of computer-generated imagery (CGI) have long been applied in the creative industries. They provide elaborate visuals in films (Bode 2017), so-called photo realism in virtual reality environments, or life-like avatars in computer games by means of performance capture technologies (Bollmer 2019). These historical predecessors of doctored content and simulation aesthetics resonate well with the idea of historical continuity and contradict a stance that regards deep fakes as a major disruption. While these analogies certainly have a point, they also tend to disregard today’s radically different media environments, in particular their fragmentation (e.g. Poell/Nieborg/van Dijck 2019).

So what’s new about the deep fake phenomenon?

When addressing the question of what makes deep fakes different from previous media phenomena, one could point to a combination of three factors. The first is the aforementioned fragmented media environment, which is the direct result of the business models social media platforms thrive on. The consequences are felt in traditional journalism, which has been drained financially, as well as in the increasing formation of mini publics, which are even reduced to personalised feeds that often lack proper fact checking. This does not just make it easier for misinformation to spread online; the personalised content also allows a form of communication that is often shaped by a high degree of emotionalisation, with the potential to incite groups or individuals.

The second and third elements both cater to a specific aesthetic and only form an effective bond in combination: the suggestive power of audio-visual media and the moving image (still the one media form that supposedly offers the strongest representation of reality) is paired with the affective dimension of communication, to which the human face is central. In other words, deep fakes evoke an extraordinary suggestive power by simulating human faces in action. Text-based media are usually met with a more critical distance by media literate readers or users, an awareness that increasingly extends to social media platforms and video sharing sites. This degree of media literacy, however, is challenged by the depiction of faces, especially those that are already known from other contexts. Faces are central to affective modes of communication and give pre-reflexive cues about emotional and mental states. The human face can even be regarded as the prime site of qualities such as trust and empathy, conveying truth and authenticity despite the cultural differences in how faces and the emotions they convey are represented and interpreted.

Deep fakes and our sense of reality

This somatic dimension clearly hints at a complex relationship between technology, affect and emotion. Unsurprisingly, for individuals, some of the most feared consequences relate precisely to these affective and somatic dimensions of the technology. They can be directly linked to the fake content that is provided. A person’s real face and voice, for example, can be integrated into pornographic videos, evoking real feelings of being violated, humiliated, scared or ashamed (Chesney and Citron 2019: 1773). In fact, most deep fake content is pornographic (Ajder et al. 2019). This complex relationship between technology, affect and emotion gains even more relevance when we consider that the content provided by social networks, and the interactions facilitated on them, are increasingly perceived as social reality per se, as part of a highly mediated social life. This is why digital images and videos affect both the individual and private idea of the self and the social persona of the public self (McNeill 2012). Both are part of a space that is open, contested and hence, in principle, very vulnerable. Identifying with digital representations of the self can even evoke somatic reactions to virtual harm, such as rape or violence that is committed against avatars (cf. Danaher 2018). It is hardly surprising that the debates around deep fake videos clearly express these enormous social and individual anxieties concerning online reputation and the manipulation of individuals’ social personas.

Of course, the suggestive power of these videos bears obvious risks for an already easily excitable public discourse. The textbook example is a fake video of inflammatory remarks by a politician on the eve of election day. By fuelling the fires of uncertainty in our mediated realities, deep fakes can easily be seen as exacerbating the fake news problem. The question of why deep fake videos create such considerable unease, however, goes beyond this element of misinformation. It is strongly related to their eerie resemblance to reality, which leaves us guessing as to whether or not to trust our senses. It is an uncanniness, in a Freudian sense, of categorical uncertainty about the strange in the familiar. We are fascinated by the illusions deep fakes create for us; they evoke amusement. At the same time, though, they remind us that our mediated realities can never be trusted at face value. Affecting us on a somatic level, deep fakes make us more susceptible to what they show, but in the end only to urge us to doubt what we actually see.

This is exactly why, contrary to all the grim forebodings, this could all actually turn out to be a good thing in today’s media environment of competing realities (with some, however, being much more trustworthy than others). It is a lucid reminder of the age-old insight that things are not always what they seem, even though, alas, first impressions deceive many.

References

Ajder, H., Patrini, G., Cavalli, F. & Cullen, L. (2019). The State of Deepfakes: Landscape, Threats, and Impact. Deeptrace. https://regmedia.co.uk/2019/10/08/deepfake_report.pdf

Bode, L. (2017). Making believe: Screen performance and special effects in popular cinema. Rutgers University Press.

Bollmer, G. (2019). The kinesthetic index: video games and the body of motion capture. InVisible Culture Journal 30.

Burkell, J., & Gosse, C. (2019). Nothing new here: Emphasizing the social and cultural context of deepfakes. First Monday. Journal on the Internet, 24 (12). https://doi.org/10.5210/fm.v24i12.10287.

Chesney, B. & Citron, D. (2019). Deep fakes: a looming challenge for privacy, democracy, and national security. California Law Review 107 (6): 1753-1820.

Danaher, J. (2018). The law and ethics of virtual sexual assault. In W. Barfield & M. Blitz (Eds.). The Law of virtual and augmented Reality. Edward Elgar Publishers, pp. 363-388.

Greengard, S. (2020). Will deepfakes do deep damage? Communications of the ACM, 63 (1), 17–19. DOI: 10.1145/3371409.

Lister, M. (2004). Photography in the age of electronic imaging. In L. Wells (Ed.). Photography: A Critical introduction. Routledge, 295-336.

Lunenfeld, P. (2000). Digital photography: The dubitative image. In P. Lunenfeld (Ed.). Snap to Grid: A User’s Guide to Digital Arts, Media, and Cultures. MIT Press, 55-69.

McNeill, L. (2012). There is no ‘I’ in network. Social networking sites and posthuman auto/biography. Biography 35 (1): 65-82.

Poell, T., Nieborg, D. & van Dijck, J. (2019). Platformisation. Internet Policy Review, 8 (4). DOI: 10.14763/2019.4.1425

This post reflects the opinion of the authors and neither necessarily nor exclusively the opinion of the institute. For more information about the content of these posts and the associated research projects, please contact info@hiig.de
